NVIDIA HGX B200 & GB200 NVL72 - Reserve capacity now

BetaBytes is a cloud infrastructure services provider specializing in generative AI service delivery and high-performance computing.

BetaBytes is a specialized cloud provider for enterprise-scale NVIDIA GPU-accelerated workloads. We deliver sovereign, highly configurable cloud infrastructure that provides the power, flexibility, and scalability needed to innovate and to handle complex workloads seamlessly.

We focus on understanding our clients' business goals, target audiences, and unique challenges.

HIGH-PERFORMING SOLUTIONS

why us

Unlock AI’s potential and lead your industry with our cutting-edge offerings, operational excellence, and high-performing, tailored solutions

BetaBytes offers a comprehensive AI platform designed to meet the technical, operational, financial, and sovereign needs of large enterprises and public sector accounts across the Middle East.

benefits

We are committed to operational excellence, ensuring every aspect of your AI journey is seamless and efficient.

Our approach is unique in its breadth of service offerings. From AI infrastructure to advanced analytics and AI-powered applications, BetaBytes delivers a full spectrum of services to ensure your AI projects succeed.

services

We understand that every AI project is unique. That’s why BetaBytes offers tailored solutions designed to meet your specific needs.

Our secure, high-performing infrastructure and customized services keep your AI projects running smoothly, efficiently, and in compliance, delivering the results you need to stay ahead of the competition.

mission

Unlock the full potential of AI with BetaBytes’ unparalleled expertise, comprehensive services, and commitment to excellence

Our mission is to power compute-intensive projects with cutting-edge technology and solutions, enabling innovative ideas to reach the market faster and make a significant impact on humanity.

Key Features

Scalable and Secure Data Infrastructure

Secure and high-speed data storage solutions with encryption, supporting data privacy and compliance with GDPR, HIPAA, and other regulations.

Advanced Vector Database Solutions

High-performance vector databases designed for efficient similarity searches.
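Similarity search ranks stored embedding vectors by how close they are to a query vector. The sketch below assumes cosine similarity as the metric and performs an exact, brute-force scan over a hypothetical in-memory index. Production vector databases typically use approximate nearest-neighbour indexes (for example HNSW) to scale to millions of vectors, so treat this only as an illustration of the idea.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat it as dissimilar
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float],
          index: Dict[str, Sequence[float]],
          k: int = 3) -> List[Tuple[str, float]]:
    """Exact (brute-force) k-nearest-neighbour search by cosine similarity."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical toy 3-dimensional "embeddings"; real ones have hundreds of dimensions.
    index = {
        "gpu-pricing": [0.9, 0.1, 0.0],
        "hpc-benchmarks": [0.7, 0.6, 0.1],
        "hr-policy": [0.0, 0.2, 0.9],
    }
    for doc_id, score in top_k([1.0, 0.2, 0.0], index, k=2):
        print(f"{doc_id}: {score:.3f}")
```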

Distributed LLM Serving

Scalable, containerized LLM deployments that ensure high availability and optimal resource use.

End-to-End RAG Pipeline

Comprehensive modular RAG systems with optimized retrieval, generation, and re-ranking components, seamlessly integrating into existing enterprise environments.
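To illustrate what "modular" means here, the following minimal sketch treats retrieval, re-ranking, and generation as independent, swappable functions. Every component is a hypothetical toy stand-in:

- a keyword-overlap retriever in place of a vector search;
- a Jaccard-similarity re-ranker in place of a learned re-ranking model;
- a stub generator that returns the grounded prompt instead of calling an LLM.

```python
from dataclasses import dataclass
from typing import Callable, List, Set

# Each stage is a plain function, so it can be replaced independently.
Retriever = Callable[[str, int], List[str]]
Reranker = Callable[[str, List[str]], List[str]]
Generator = Callable[[str, List[str]], str]


@dataclass
class RagPipeline:
    retrieve: Retriever
    rerank: Reranker
    generate: Generator
    candidates: int = 10   # passages returned by first-stage retrieval
    context_size: int = 3  # re-ranked passages passed to the generator

    def answer(self, question: str) -> str:
        passages = self.retrieve(question, self.candidates)
        ranked = self.rerank(question, passages)[: self.context_size]
        return self.generate(question, ranked)


# Hypothetical toy corpus and components; production systems would use a
# vector database, a learned re-ranker, and an LLM endpoint instead.
CORPUS = [
    "The H200 has 141GB of HBM3e memory.",
    "The H200 offers 4.8TB/s of memory bandwidth.",
    "Our offices are closed on public holidays.",
]


def terms(text: str) -> Set[str]:
    return {word.strip(".,?!").lower() for word in text.split()}


def keyword_retrieve(question: str, k: int) -> List[str]:
    """First stage: rank by raw term overlap, dropping passages with none."""
    q = terms(question)
    scored = sorted(((len(q & terms(doc)), doc) for doc in CORPUS),
                    key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored if score > 0][:k]


def jaccard_rerank(question: str, passages: List[str]) -> List[str]:
    """Second stage: re-score by Jaccard similarity, normalising for passage length."""
    q = terms(question)
    return sorted(passages,
                  key=lambda doc: len(q & terms(doc)) / len(q | terms(doc)),
                  reverse=True)


def prompt_only_generate(question: str, context: List[str]) -> str:
    """Stub generator: returns the grounded prompt an LLM would receive."""
    lines = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{lines}\nQuestion: {question}"


pipeline = RagPipeline(keyword_retrieve, jaccard_rerank, prompt_only_generate)

if __name__ == "__main__":
    print(pipeline.answer("How much memory bandwidth does the H200 offer?"))
```

Each stage is just a function with a fixed signature. Swapping the toy retriever for a vector-database client therefore leaves the rest of the pipeline untouched.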

Multi-layered Security Framework

End-to-end encryption, advanced access controls, and secure API gateways for safeguarding sensitive data and model access.

Automated Monitoring & Maintenance

Comprehensive logging, real-time performance monitoring, and automated alerts to ensure optimal system performance and quickly resolve issues.

Compliance and Governance Tools

Built-in tools for tracking data lineage, audit logging, and automated compliance checks to adhere to industry-specific regulatory standards.

Efficient Resource Management

Cost-effective resource allocation through auto-scaling capabilities, optimizing computational resources based on demand.
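Demand-based auto-scaling is often a proportional rule: scale the replica count by the ratio of the observed metric to its target. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target), and adds a tolerance band and min/max bounds. The page does not say which autoscaler BetaBytes uses. The function name, metric, and limits here are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas: int,
                     current_util: float,
                     target_util: float,
                     min_replicas: int = 1,
                     max_replicas: int = 16,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule modelled on the Kubernetes HPA (hypothetical helper)."""
    ratio = current_util / target_util
    # Skip scaling when the metric is already close to target, to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    print(desired_replicas(4, current_util=90, target_util=60))
    print(desired_replicas(4, current_util=63, target_util=60))
```

Consider 4 replicas running at 90% utilization against a 60% target: they scale to 6. A reading of 63% falls inside the 10% tolerance band and leaves the count unchanged.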

Seamless Integration and Deployment

CI/CD pipelines and API integrations that facilitate smooth RAG and LoRA system deployment within existing enterprise architectures, minimizing operational disruptions.

Explainable AI and Model Governance Framework

Tools for model interpretability and a governance framework to ensure responsible AI practices, enhancing transparency and accountability in AI systems deployed in enterprise environments.

Collaborative Environment for Cross-functional Teams

A secure, shared workspace that enables seamless collaboration among data scientists, ML engineers, and business stakeholders, bridging the gap between model development and business needs.

State-of-the-Art Infrastructure

Industry-leading infrastructure across storage, compute, and networking.

INNOVATIVE APPROACH

OUR APPLICATIONS

Data Analytics

Deep Learning

High-Performance Computing

Large Language Models

Machine Learning

Rendering

PRODUCTS: GPU

NVIDIA H200

As the first GPU with HBM3e, the H200 supercharges generative AI. Its faster, larger memory accelerates generative AI and LLMs while advancing scientific computing for HPC workloads.

MEMORY

141GB

MEMORY BANDWIDTH

4.8TB/s

Performance highlights: GPT-3 175B inference, Llama 2 70B inference, and HPC simulation.

NVIDIA H200 TENSOR CORE GPU

Key Features

HBM3e Memory

The H200 is the world's first GPU with HBM3e memory. It offers 4.8TB/s of memory bandwidth, a 43% increase over the H100, and expands GPU memory capacity to 141GB, nearly double the H100’s 80GB. This combination significantly improves data handling for generative AI and HPC applications. For LLMs such as GPT-3, the larger memory capacity enables up to 18X higher performance than the original A100, compared with up to 11X for the H100.
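The 43% and "nearly double" figures follow from simple ratios. The sketch below assumes the H100 SXM's 3.35TB/s memory bandwidth, which is not stated on this page. The 80GB and 141GB capacities come from the paragraph above.

```python
H200_BANDWIDTH_TBPS = 4.8
H100_BANDWIDTH_TBPS = 3.35  # assumption: H100 SXM figure, not given on this page
H200_MEMORY_GB = 141
H100_MEMORY_GB = 80


def percent_increase(new: float, old: float) -> float:
    """Relative gain of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


bw_gain = percent_increase(H200_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)
capacity_ratio = H200_MEMORY_GB / H100_MEMORY_GB

print(f"Bandwidth gain: {bw_gain:.0f}%")
print(f"Capacity ratio: {capacity_ratio:.1f}x")
```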

Unmatched Performance

An eight-GPU HGX H200 system delivers 32 petaflops of FP8 deep learning compute, making it well suited to the most demanding AI applications. The H200 also triples the floating-point operations per second (FLOPS) of double-precision Tensor Cores relative to the A100, delivering 67 teraFLOPS of FP64 Tensor Core computing for HPC. AI-fused HPC applications can use the H200’s TF32 Tensor Core precision to reach nearly one petaFLOP of throughput for single-precision matrix-multiply operations, with zero code changes.

Advanced Architecture

Built on the NVIDIA Hopper™ architecture, the H200 is designed to keep gaining performance through future software updates.

Compatibility

Fully compatible with existing HGX H100 systems, allowing for seamless integration and performance upgrades without infrastructure changes.

Versatile Deployment

Suitable for various data center environments, including on-premises, cloud, hybrid-cloud, and edge deployments.

NVIDIA H200 TENSOR CORE GPU

TECH SPECS

GPU Memory

141GB

GPU Memory Bandwidth

4.8TB/s

FP8 Tensor Core Performance

4 PetaFLOPS

Form Factor

SXM | PCIe

Server Options

NVIDIA HGX H200 partner and NVIDIA-certified systems with 4 or 8 GPUs

NVIDIA AI Enterprise 5.0

Included

PRODUCTS: ACCELERATED COMPUTING PLATFORM

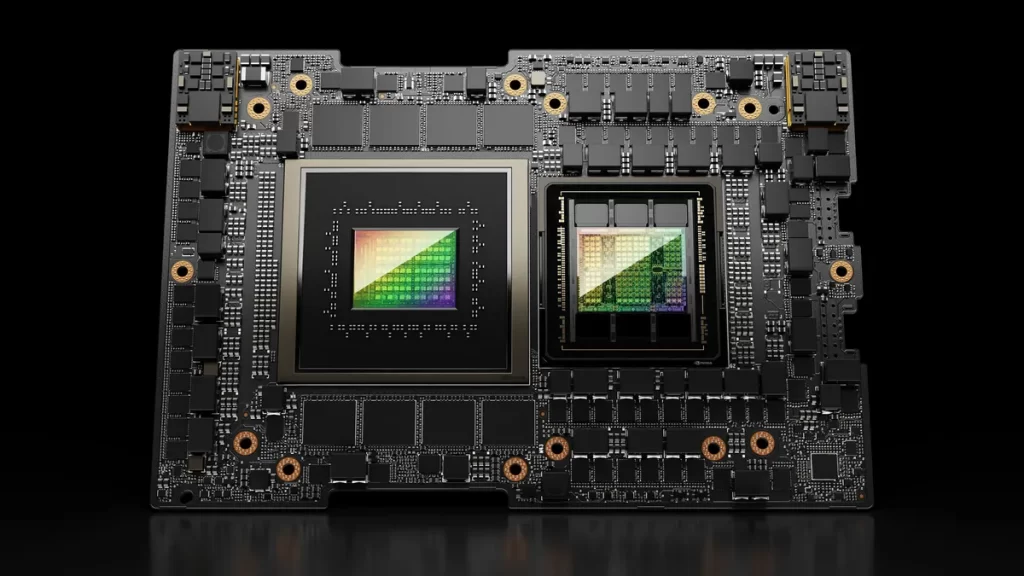

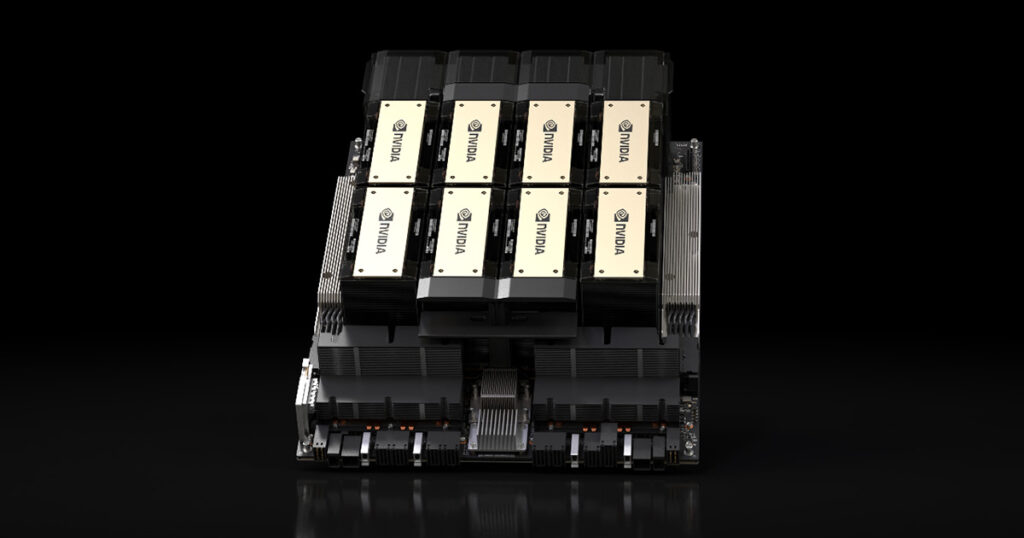

NVIDIA HGX H200

The NVIDIA HGX™ H200 AI supercomputing platform brings together the full power of NVIDIA GPUs, NVLink®, NVIDIA networking, and fully optimized AI and high-performance computing (HPC) software stacks to provide the highest application performance and drive the fastest time to insights.

GPUs

4 or 8

FP8 Tensor Core

Over 32 PFLOPS (eight-way)

NVIDIA HGX H200 COMPUTING PLATFORM

Key Features

Scalable Configuration

Available in four- and eight-way configurations to meet diverse computing needs.

Eight-Way Configuration

An eight-way HGX H200 provides over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory.
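The eight-way totals are the per-GPU figures multiplied by eight. This sketch uses the rounded 4 PetaFLOPS FP8 and 141GB values from the H200 tech specs. Treat the results as theoretical peaks rather than measured throughput.

```python
GPUS = 8
FP8_PFLOPS_PER_GPU = 4  # rounded value from the H200 tech specs
HBM_GB_PER_GPU = 141    # H200 memory capacity

total_fp8_pflops = GPUS * FP8_PFLOPS_PER_GPU
total_hbm_tb = GPUS * HBM_GB_PER_GPU / 1000  # decimal terabytes

print(f"FP8 compute: {total_fp8_pflops} PFLOPS")
print(f"Aggregate HBM: {total_hbm_tb:.3f} TB")
```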

Seamless Integration

Compatible with existing HGX H100 systems, facilitating easy upgrades.

NVIDIA NVLink and NVSwitch

High-speed interconnects enable the creation of powerful scale-up servers, ensuring efficient communication between multiple GPUs.

NVIDIA HGX H200 COMPUTING PLATFORM

TECH SPECS

INT8 Tensor Core

32 POPS

FP16/BFLOAT16 Tensor Core

16 PFLOPS

TF32 Tensor Core

8 PFLOPS

FP32

540 TFLOPS

PRODUCTS: SOFTWARE

NVIDIA AI ENTERPRISE 5.0

NVIDIA® AI Enterprise is an all-encompassing, secure AI software platform designed to accelerate the data science pipeline and streamline the development and deployment of AI in production environments. As an end-to-end solution, it offers enterprises a robust, stable, and cloud-native platform packed with over 100 frameworks, pretrained models, and tools, covering a wide range of AI applications including generative AI, computer vision, and speech AI.

Data Processing

Up to 5X faster

Cheaper Operations

Up to 4X lower cost

Acceleration

Up to 40X faster inference than CPU-only platforms

Frameworks

100+

NVIDIA AI ENTERPRISE 5.0 SOFTWARE PLATFORM

Benefits for enterprises

Secure + Stable Platform

Supports a broad range of AI workloads, including generative AI, computer vision, speech AI, and more. This secure, stable, cloud-native platform of AI software includes over 100 frameworks, pretrained models, and tools.

Data preparation

Process data up to 5 times faster while cutting operational costs by up to 4 times with the NVIDIA RAPIDS Accelerator for Apache Spark.
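Enabling the RAPIDS Accelerator is largely a matter of Spark configuration. The sketch below collects commonly used properties into a dictionary:

- `spark.plugins` loads the accelerator's `com.nvidia.spark.SQLPlugin`;
- the `spark.*.resource.gpu.amount` properties are Spark 3's standard GPU scheduling settings.

The helper name `rapids_spark_conf` and its default values are assumptions for illustration. The accelerator jar and cluster-specific settings (such as a GPU discovery script) are not shown, so check the RAPIDS Accelerator documentation for your Spark version.

```python
from typing import Dict


def rapids_spark_conf(gpus_per_executor: int = 1, tasks_per_gpu: int = 4) -> Dict[str, str]:
    """Hypothetical helper returning Spark properties for the RAPIDS Accelerator."""
    return {
        # Loads the RAPIDS Accelerator plugin (its jar must be on the classpath).
        "spark.plugins": "com.nvidia.spark.SQLPlugin",
        # Toggles GPU acceleration of SQL/DataFrame operations.
        "spark.rapids.sql.enabled": "true",
        # Standard Spark 3 GPU scheduling: GPUs per executor, GPU share per task.
        "spark.executor.resource.gpu.amount": str(gpus_per_executor),
        "spark.task.resource.gpu.amount": str(1 / tasks_per_gpu),
    }


# Usage with PySpark (requires pyspark and the RAPIDS jar, so left commented out):
# from pyspark.sql import SparkSession
# builder = SparkSession.builder.appName("etl")
# for key, value in rapids_spark_conf().items():
#     builder = builder.config(key, value)
# spark = builder.getOrCreate()

if __name__ == "__main__":
    for key, value in rapids_spark_conf().items():
        print(f"{key}={value}")
```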

AI Training

Create custom, accurate models in hours, instead of months, using NVIDIA TAO Toolkit and pretrained models.

Optimization for Inference

Accelerate application performance up to 40 times over CPU-only platforms during inference with NVIDIA TensorRT.

Deployment at Scale

Simplify and optimize the deployment of AI models at scale and in production with NVIDIA Triton Inference Server.

NVIDIA AI ENTERPRISE 5.0 SOFTWARE PLATFORM

Top Use Cases

LLM/Generative AI with NeMo

Extract, transform, and load (ETL)/Data Processing with RAPIDS Accelerator for Apache Spark

Inference with TensorRT, Triton Inference Server and Triton Management Service

Speech and Translation AI with Riva

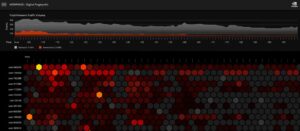

Cybersecurity with Morpheus

Healthcare with MONAI and Parabricks